Mike C. starting his session on Machine Learning and Artificial Intelligence #AcumaticaDevCon

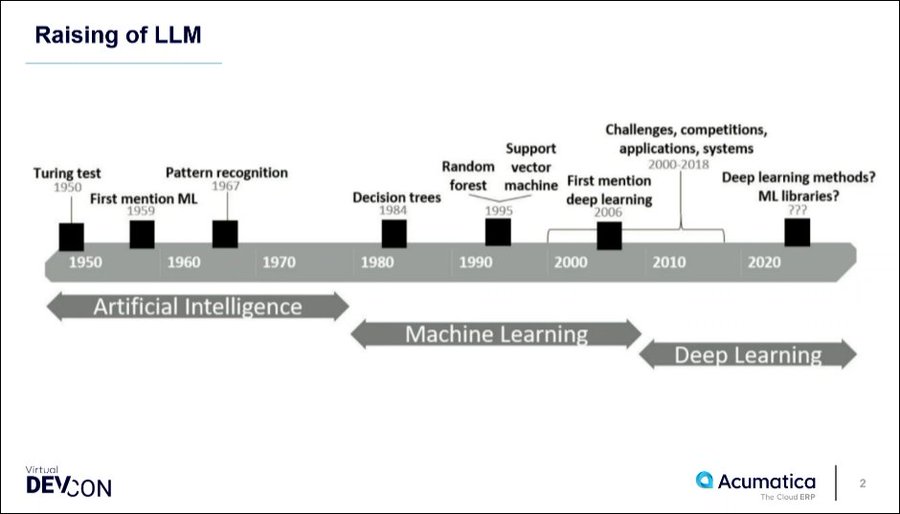

Here’s a slide talking about Artificial Intelligence and Machine Learning. Before the 2020s and ChatGPT, there wasn’t much that could apply to ERP. #AcumaticaDevCon

To drive the ChatGPT car, you don’t need to know the internals of how the engine works. You just need to know what you can do with ChatGPT. #AcumaticaDevCon

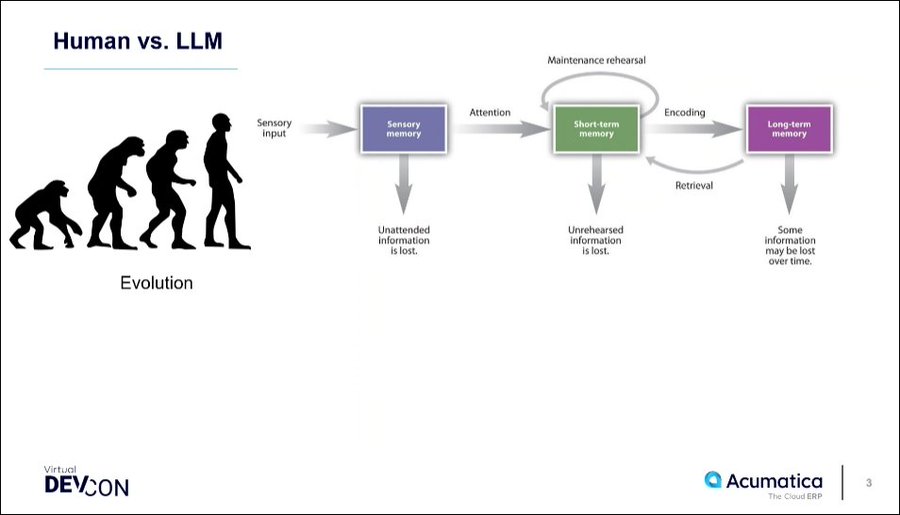

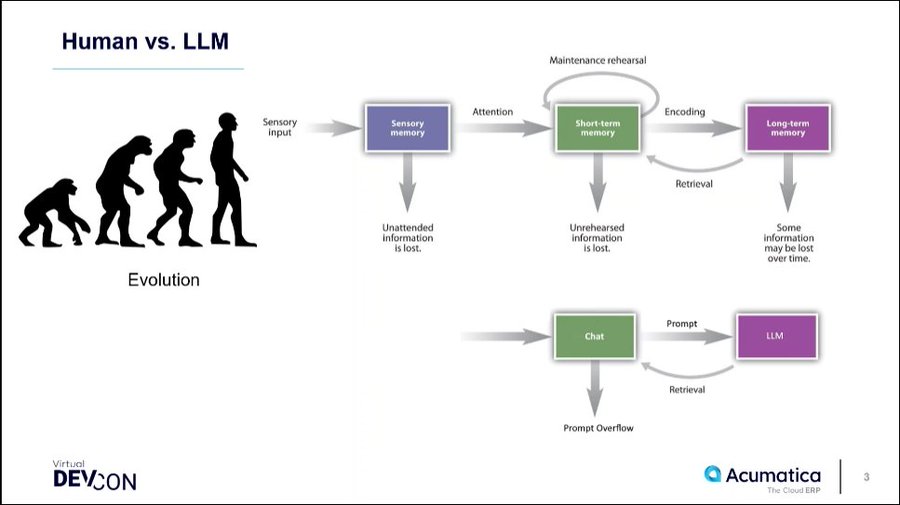

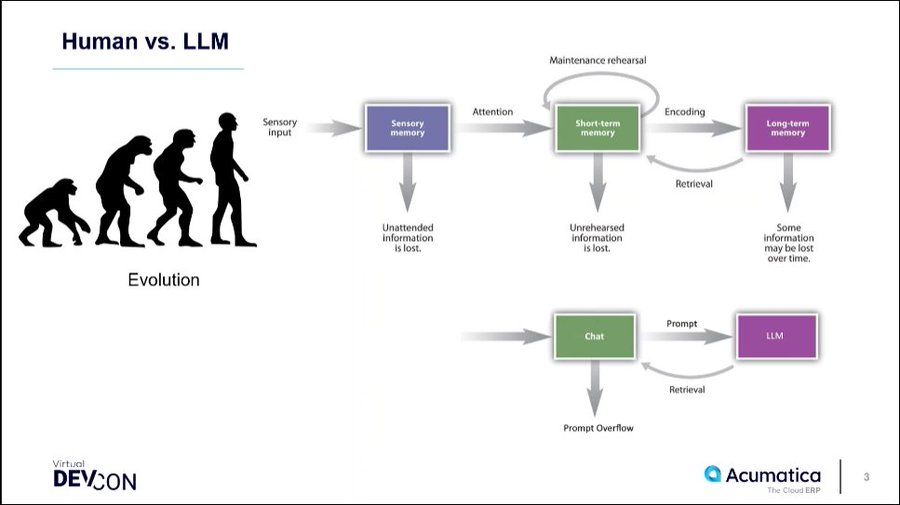

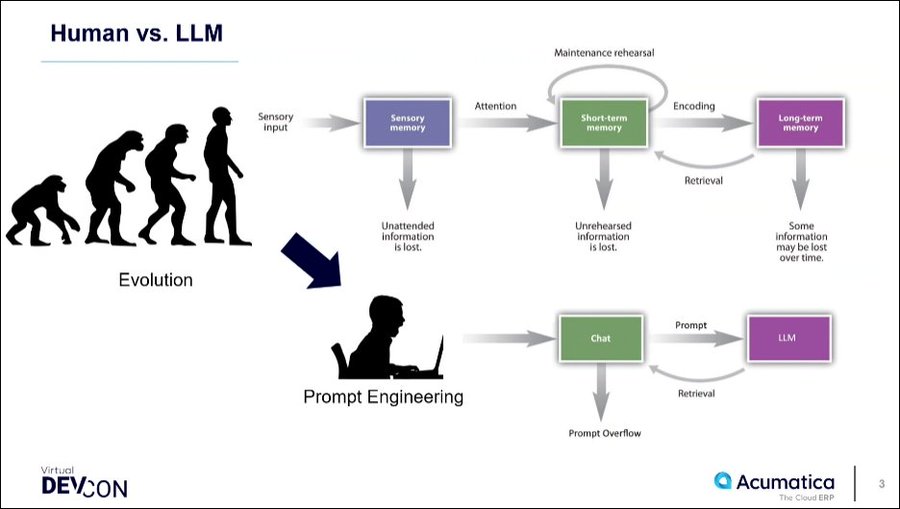

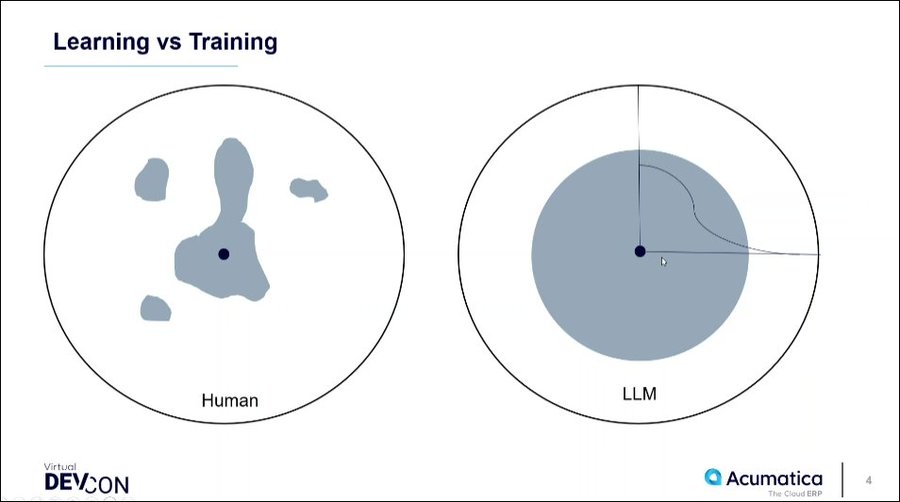

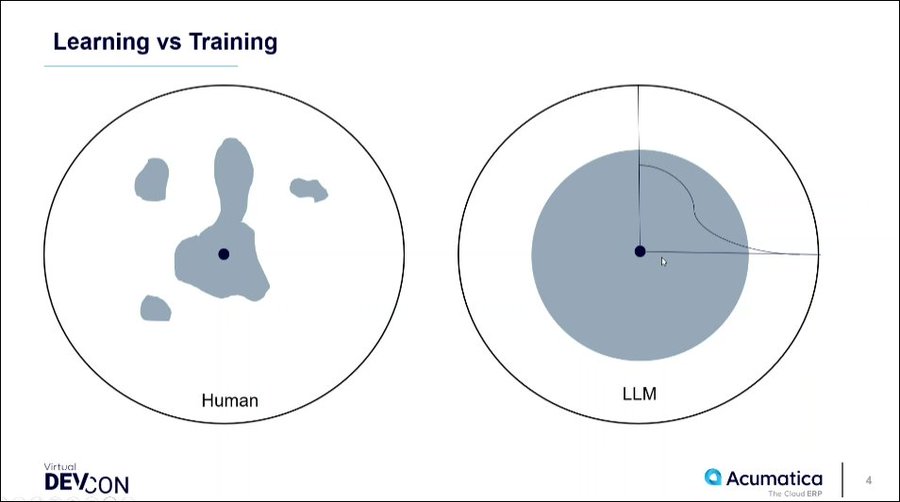

How the human brain works is the result of a long evolutionary process #AcumaticaDevCon

Interacting with an LLM in the Chat window is similar to how our Short-term memory works. The LLM itself is similar to how our Long-term memory works. #AcumaticaDevCon

A difference between our Short-term memory and an LLM Chat window is that the logical reasoning is supplied by our interaction with the chat window rather than by the LLM itself. I guess that's because the LLM doesn’t really have a Short-term memory? #AcumaticaDevCon
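Here is one way to see why the LLM lacks a Short-term memory. Chat-style LLM APIs are generally stateless, so the chat window (the client) keeps the conversation and resends all of it with every request. The minimal Python sketch below uses a hypothetical `call_llm` stub in place of a real model call. The stub only reports which context it received.

```python
from typing import Dict, List

Message = Dict[str, str]


def call_llm(messages: List[Message]) -> str:
    """Hypothetical stand-in for a chat-model API call.

    A real model would generate text; this stub only reports how much
    context it received, to show that it sees nothing beyond `messages`.
    """
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); latest: {user_turns[-1]!r}"


class ChatWindow:
    """The chat window, not the model, holds the conversation history."""

    def __init__(self) -> None:
        self.history: List[Message] = []

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # The full history is re-sent on every turn; the model keeps no state.
        reply = call_llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


chat = ChatWindow()
chat.ask("What is a sales order?")
print(chat.ask("How do I release it?"))
# prints: I can see 2 user message(s); latest: 'How do I release it?'

# A fresh call without the history has no memory of the earlier turn.
print(call_llm([{"role": "user", "content": "How do I release it?"}]))
# prints: I can see 1 user message(s); latest: 'How do I release it?'
```

If the client stops sending the history, the "memory" disappears. This fits the idea that the human side of the chat supplies the continuity.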

So we humans are still needed for Prompt Engineering #AcumaticaDevCon

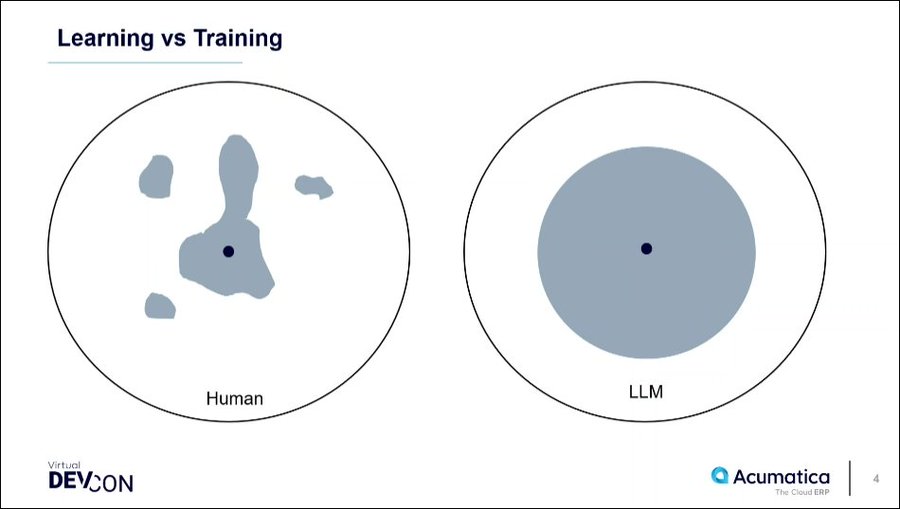

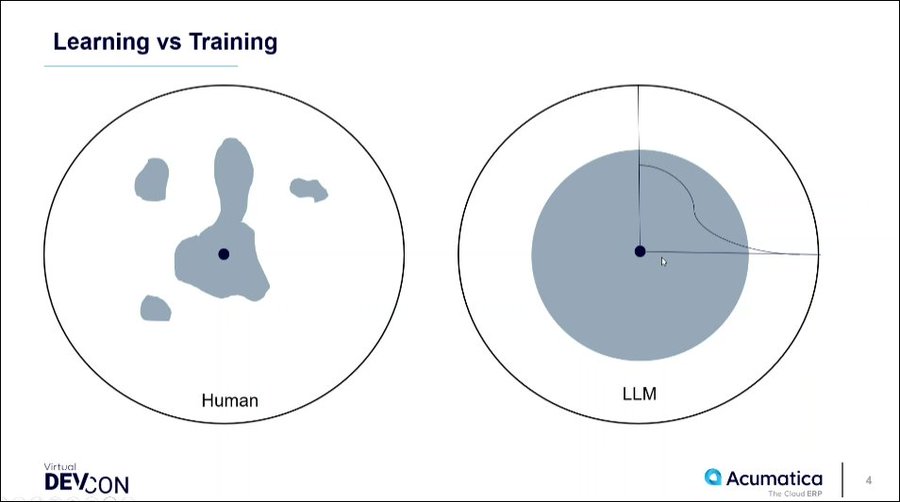

The LLM base of knowledge is more static than the human base of knowledge #AcumaticaDevCon

The Y axis is density and the X axis is how much information is available. LLM knowledge is denser for topics where a lot of info is available. #AcumaticaDevCon

An LLM is more of an average of common opinion; it doesn't prioritize information that it trusts or apply common sense #AcumaticaDevCon

If you ask an LLM a question where a human has a blind spot, at least the LLM will give you a starting point for research, much like a Google search. #AcumaticaDevCon

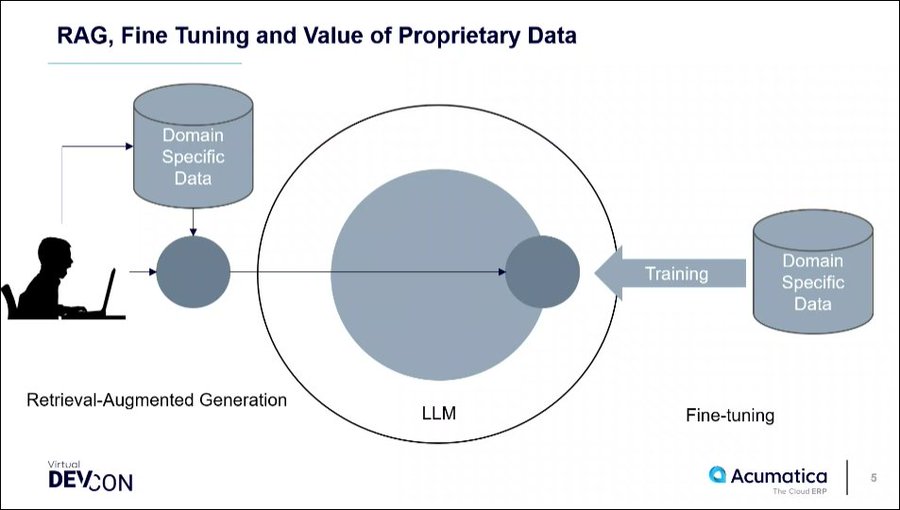

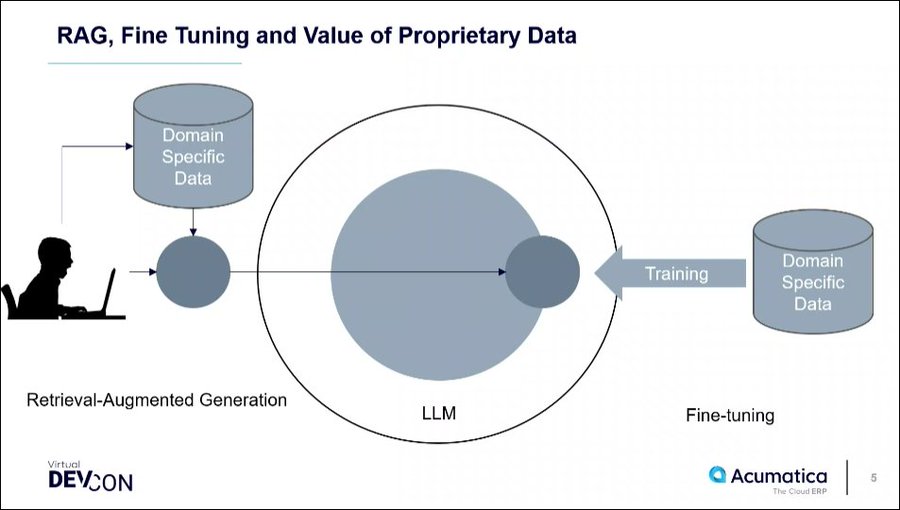

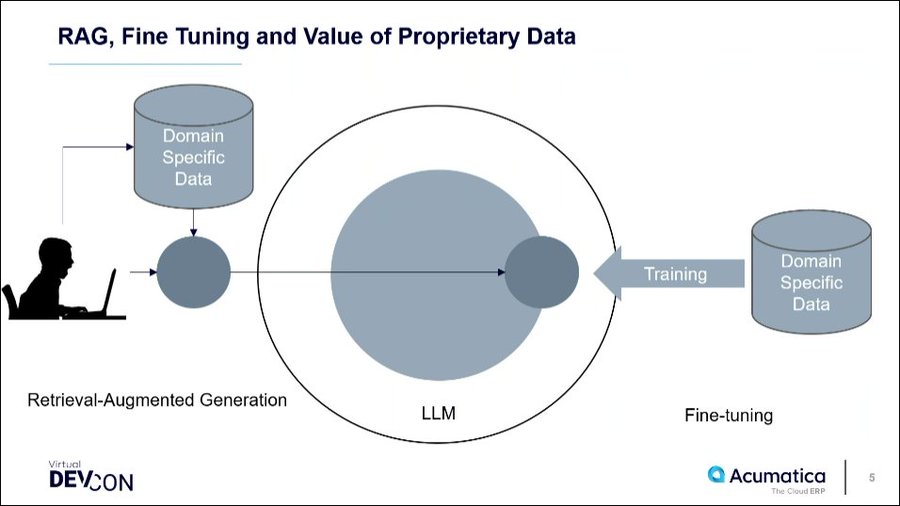

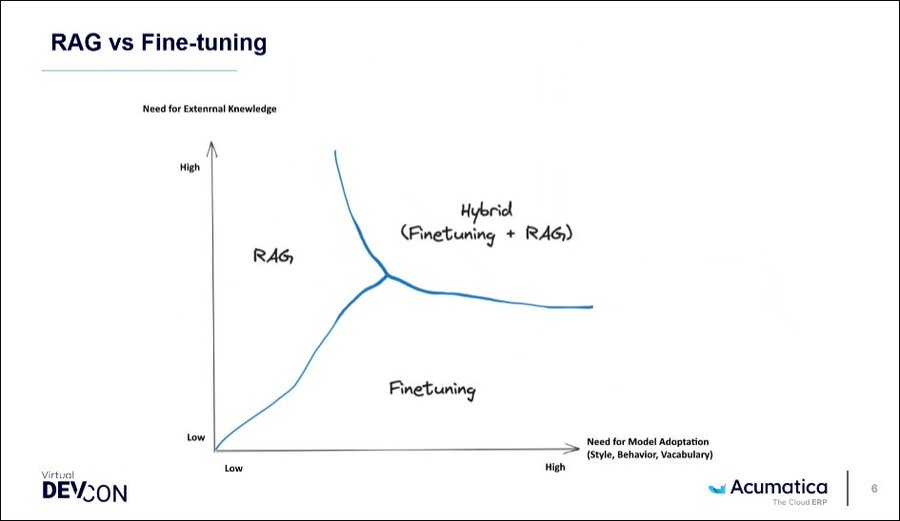

To help an LLM in an area where there isn’t a large density of information, there are two techniques:

1. Fine Tuning. Disadvantage: it's expensive and can’t be done in real time. #AcumaticaDevCon

2. Retrieval-Augmented Generation (RAG): instead of retraining the model, the prompt is changed, augmented with relevant information retrieved at question time, to get the most out of the LLM #AcumaticaDevCon
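To make the RAG idea concrete, here is a minimal Python sketch. It assumes a tiny hypothetical set of documents. It uses simple word overlap where a real RAG system would typically use embedding-based search. The point is only that the model stays unchanged while the prompt is augmented with retrieved text.

```python
import re
from typing import List, Set

# Hypothetical knowledge-base snippets standing in for a company's own documents.
DOCUMENTS = [
    "Widget orders over 100 units require manager approval.",
    "Standard widget orders ship within 3 business days.",
    "The cafeteria is open from 8 am to 3 pm.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: List[str], k: int = 2) -> List[str]:
    """Rank documents by word overlap with the question (a toy stand-in
    for the similarity search a real RAG system would use)."""
    q = tokenize(question)
    scored = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return scored[:k]


def build_prompt(question: str, docs: List[str]) -> str:
    """Augment the prompt with the retrieved context before calling the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


print(build_prompt("How fast do widget orders ship?", DOCUMENTS))
```

The resulting prompt would then be sent to the LLM as-is. Nothing is retrained, so updating the knowledge only means updating the documents. That is one reason RAG tends to suit frequently changing data better than Fine Tuning.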

Fine Tuning is better for building Vertical LLMs for domain-specific stuff #AcumaticaDevCon

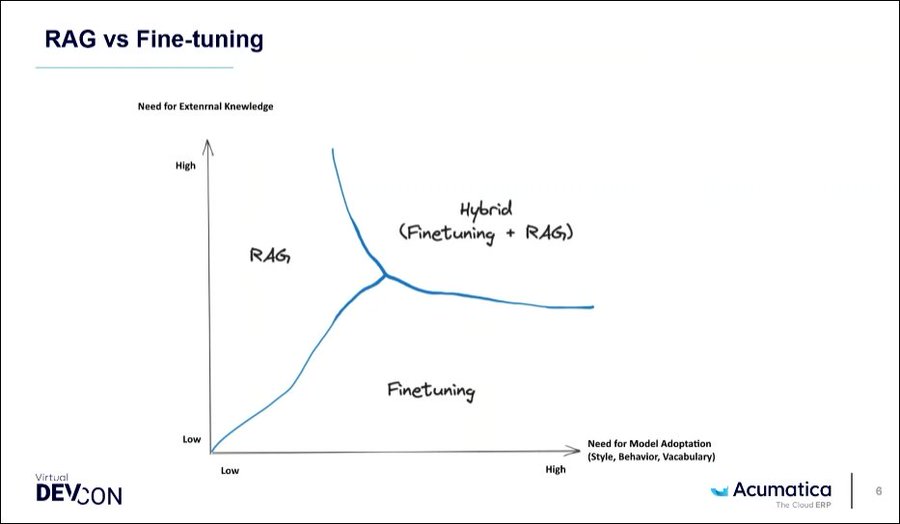

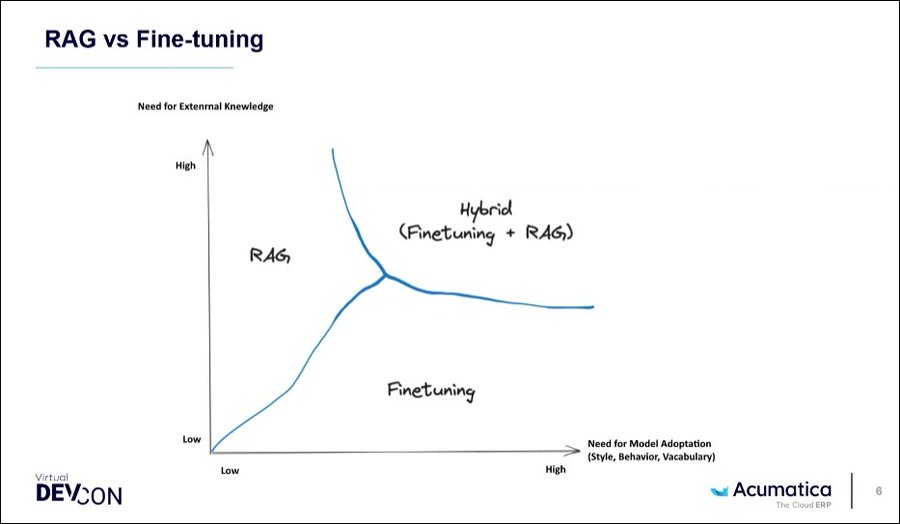

This is when to use Fine Tuning vs. RAG #AcumaticaDevCon

These days, it’s not so much about understanding the details of how an LLM works, but more about applying RAG or Fine Tuning #AcumaticaDevCon

Building an LLM is VERY expensive, so there will only be a few players (OpenAI, Microsoft, Google, etc.), but LLMs will become a commodity that companies like Acumatica will leverage #AcumaticaDevCon

This session is Mike’s attempt to explain, at a high level, how Acumatica is planning to leverage modern LLM technology #AcumaticaDevCon

LLMs will not replace Traditional ML; rather, they will complement it #AcumaticaDevCon

Here are situations where Traditional Machine Learning methods will continue to be better than LLMs #AcumaticaDevCon

Mike anticipates SLMs (Small Language Models), which will leverage an LLM but for a specific domain. #AcumaticaDevCon

Machine Learning is good at vertical solutions, but it’s difficult to come up with a horizontal solution. An LLM, however, can sit on top of the Machine Learning pipelines and manage them. #AcumaticaDevCon

Mike thinks that the bulk of monetary investment these days is going into the area of Introduction of Reasoning #AcumaticaDevCon

Agent Interaction means that the LLM has multiple “libraries” available at its fingertips that it can call to get back calculation results #AcumaticaDevCon
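Here is a rough Python sketch of that idea. The model doesn't do the arithmetic itself. Instead, it returns a request naming a tool and its arguments, and the surrounding program runs that tool and hands back the result. Several details are hypothetical: the JSON shape, the tool names `line_total` and `apply_discount`, and the hard-coded model reply. Real LLM APIs each define their own tool-calling format.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical "libraries" (tools) the agent can call for exact calculations.
def line_total(quantity: int, unit_price: float) -> float:
    return round(quantity * unit_price, 2)


def apply_discount(amount: float, percent: float) -> float:
    return round(amount * (1 - percent / 100), 2)


TOOLS: Dict[str, Callable[..., Any]] = {
    "line_total": line_total,
    "apply_discount": apply_discount,
}


def run_tool_call(model_output: str) -> Any:
    """Execute the tool call the model asked for.

    Assumes the model replies with JSON like
    {"tool": "<name>", "arguments": {...}}.
    """
    call = json.loads(model_output)
    tool = TOOLS[call["tool"]]
    return tool(**call["arguments"])


# Pretend the LLM decided it needs an exact number instead of guessing one.
model_output = '{"tool": "line_total", "arguments": {"quantity": 12, "unit_price": 4.25}}'
result = run_tool_call(model_output)
print(result)  # 51.0
```

In a full agent loop, `result` would be added to the conversation so the model can use the exact figure in its reply.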

More efficient hardware will eventually enable Artificial General Intelligence (AGI). It’s not a question of if, but of when #AcumaticaDevCon

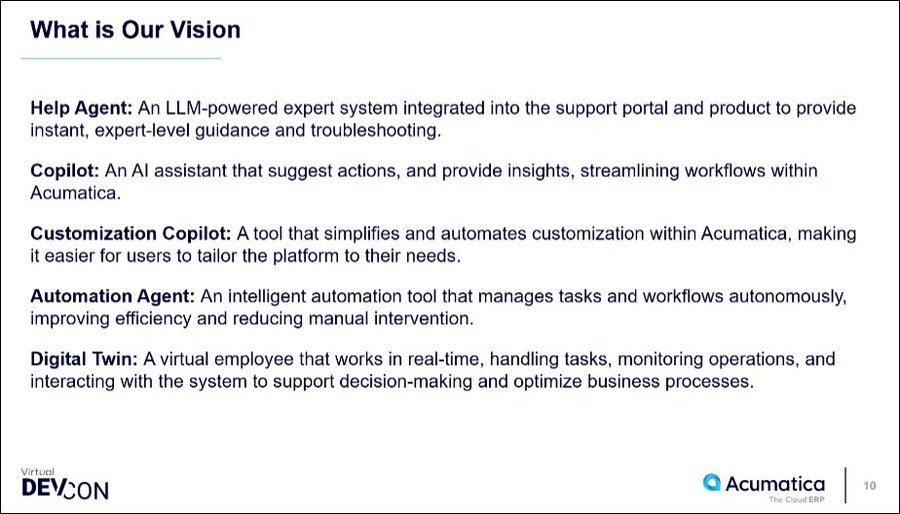

What is Acumatica’s vision for applying AI? The Help Agent will be like a much better knowledge base #AcumaticaDevCon

Copilot will make building Customization Projects easier #AcumaticaDevCon

The Digital Twin idea looks interesting #AcumaticaDevCon

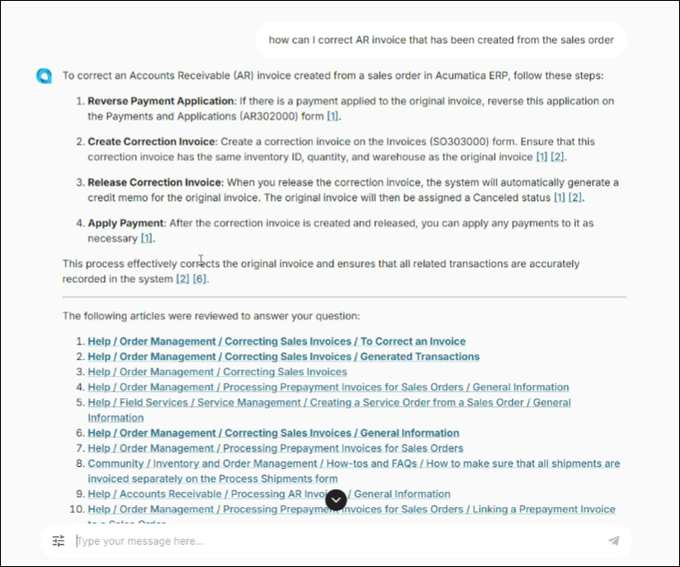

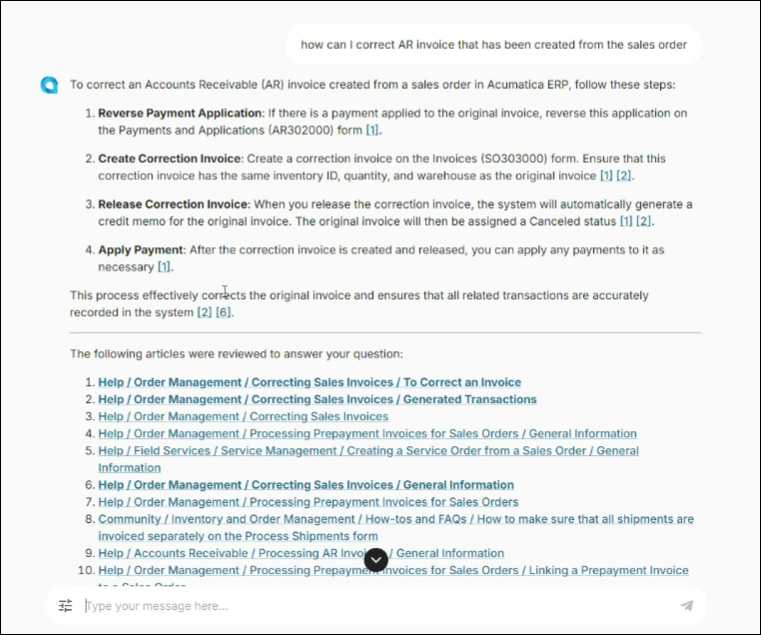

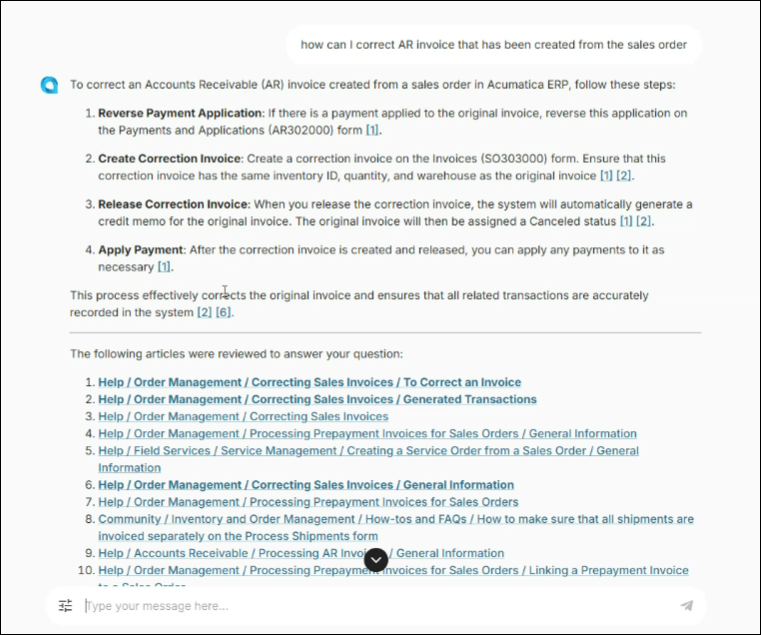

Here’s a prototype of something that’s currently called HelpGPT which might get released publicly sometime in 2025 #AcumaticaDevCon

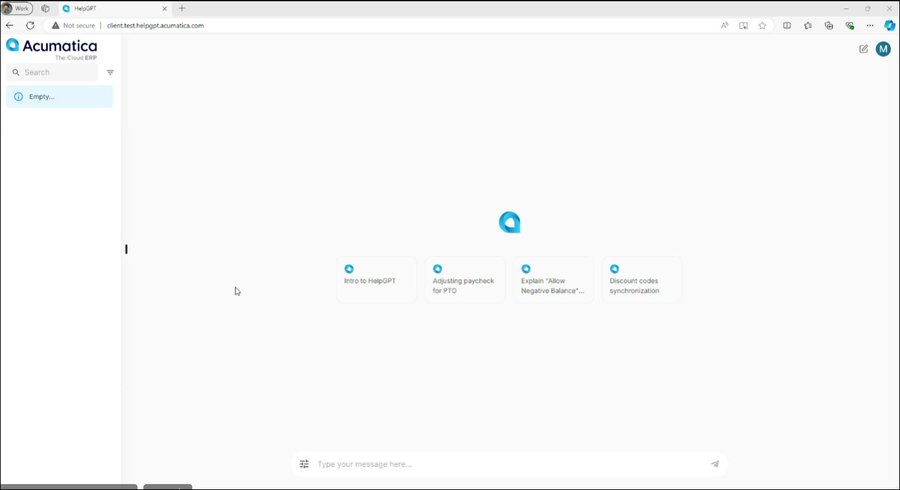

This is basically ChatGPT, but for Acumatica data #AcumaticaDevCon

HelpGPT actually uses 3 different LLMs (including ChatGPT) on the backend, with 15-16 interactions with those LLMs when you ask a question. Very interesting. #AcumaticaDevCon
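The session didn't cover how HelpGPT's pipeline is built. The following Python sketch is therefore hypothetical and only shows the general pattern of one question fanning out into several calls across different models. The model names (`small`, `large`, `checker`), the pipeline steps, and the stub models are all made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Orchestrator:
    """Routes each pipeline step to a (hypothetical) model and logs every call."""
    models: Dict[str, Callable[[str], str]]
    calls: List[str] = field(default_factory=list)

    def ask(self, model: str, prompt: str) -> str:
        self.calls.append(model)
        return self.models[model](prompt)


def make_stub(name: str) -> Callable[[str], str]:
    """Hypothetical stand-in for one LLM: echoes a prefix of the prompt."""
    return lambda prompt: f"[{name}] {prompt[:40]}"


def answer(question: str, orch: Orchestrator, sub_questions: int = 3) -> str:
    # 1. A cheaper model splits the question into focused sub-questions.
    plan = [orch.ask("small", f"Sub-question {i} of: {question}") for i in range(sub_questions)]
    # 2. A stronger model drafts an answer for each sub-question.
    drafts = [orch.ask("large", p) for p in plan]
    # 3. A third model checks each draft.
    checked = [orch.ask("checker", d) for d in drafts]
    # 4. The stronger model combines the checked drafts into one reply.
    return orch.ask("large", " | ".join(checked))


orch = Orchestrator({n: make_stub(n) for n in ("small", "large", "checker")})
answer("How do I set up a new warehouse?", orch)
print(len(orch.calls), sorted(set(orch.calls)))
# prints: 10 ['checker', 'large', 'small']
```

Each extra step multiplies the number of calls. For example, setting `sub_questions=5` in this sketch yields 16 calls.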

They will also come up with an agent that can be plugged into something like ChatGPT and called as an API to get answers to questions about Acumatica #AcumaticaDevCon

Acumatica is already doing this internally, with varying results. Sometimes it’s good and sometimes it’s not. #AcumaticaDevCon

HelpGPT probably wouldn’t be hosted in the Acumatica hosting environment; it would probably be hosted somewhere else. #AcumaticaDevCon